I can’t reproduce this with the data and queries you provided:

> For testing, I inserted about 450k rows of text into a table on a 4-core, 4 GB VPS, then did a search test for its full text search performance. In the end I got a decent result of about 1700 queries/second.
>
> However, when I ported exactly the same data to an 8-core, 16 GB VPS, the search performance went down to 1200 queries/second.

Here’s my load command and the results:

8 cores - ~360 qps:

```
root@8cores-32gb:~# (while true; do shuf -n 1 test_query.log; done) | pv -l | parallel --round-robin -j24 --progress "mysql -P9306 -h 0 -e {} > /dev/null"
...
local:24/25512/100%/0.0s 25.5k 0:01:10 [ 362 /s]
```

4 cores - ~185 qps:

```
root@4cores-16gb:~# (while true; do shuf -n 1 test_query.log; done) | pv -l | parallel --round-robin -j8 --progress "mysql -P9306 -h 0 -e {} > /dev/null"
...
local:8/35630/100%/0.0s 35.6k 0:03:01 [ 184 /s]
```
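Per core, the two runs are almost identical, so throughput scaled roughly linearly with the core count. A small sketch of that arithmetic, using the pv rates shown above (`per_core` and `speedup` are just illustrative helper names, not part of any tool):

```shell
#!/usr/bin/env bash
# Compare throughput per core for the two runs above.
# Inputs are the pv rates from the benchmark output (362 and 184 qps).

# per_core QPS CORES -> queries/second per core, two decimals
per_core() {
  awk -v q="$1" -v c="$2" 'BEGIN { printf "%.2f\n", q / c }'
}

# speedup QPS_A QPS_B -> ratio QPS_A / QPS_B, two decimals
speedup() {
  awk -v a="$1" -v b="$2" 'BEGIN { printf "%.2f\n", a / b }'
}

echo "8 cores: $(per_core 362 8) qps/core"
echo "4 cores: $(per_core 184 4) qps/core"
echo "speedup: $(speedup 362 184)x for 2x the cores"
```

This gives about 45 qps per core on both machines and a ~1.97x speedup for doubling the cores.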

I changed nothing in your config, data, or queries.

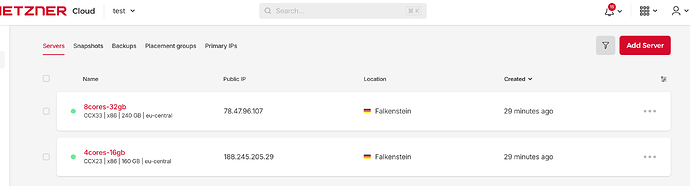

I used these VPSes in Hetzner with dedicated CPUs:

The OS I used was Debian 11.

Manticore version:

```
root@8cores-32gb:~# searchd -v
Manticore 6.3.9 dd29aca47@24120423 dev (columnar 2.3.1 edadc69@24112219) (secondary 2.3.1 edadc69@24112219) (knn 2.3.1 edadc69@24112219)
```

You mentioned “for its full text search performance”, but the queries you provided are not full-text; none of them even contains `match`:

```
root@8cores-32gb:~# grep -i match test_query.log
root@8cores-32gb:~#
```
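To check a query log before benchmarking, a quick count of full-text versus other queries can help. This is a rough sketch: `count_fulltext` is a hypothetical helper, the sample log and the table name `idx` are made up, and matching on `match(` is only a heuristic for a `MATCH()` clause:

```shell
#!/usr/bin/env bash
# count_fulltext FILE -> number of lines containing "match(" (case-insensitive),
# a rough proxy for queries that use a MATCH() full-text clause.
count_fulltext() {
  # grep -c prints 0 and exits 1 when nothing matches; keep the 0, ignore the status
  grep -ci 'match *(' "$1" || true
}

# Hypothetical sample log; point this at your own test_query.log instead.
log=$(mktemp)
cat > "$log" <<'EOF'
SELECT * FROM idx WHERE id = 10
SELECT * FROM idx WHERE MATCH('hello world')
select id from idx where match('@title test') limit 5
EOF

total=$(wc -l < "$log")
fulltext=$(count_fulltext "$log")
echo "$fulltext of $total queries are full-text"
rm -f "$log"
```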

If you can share the full-text queries you actually benchmarked, I can try to reproduce your issue again.