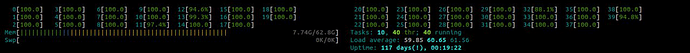

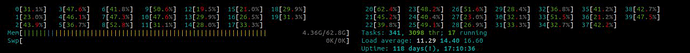

I have the same problem. The system load average gradually climbs to about 50 (capped by the thread count, I suppose) because some queries hang. The hanging queries are either batch or simple ones, but what they all have in common is a filter on an MVA attribute.

Even very simple queries like this one hang:

SELECT id FROM post WHERE MATCH('(@(tags,user_screen_name,text,user_name) video)') AND host_id = 5 LIMIT 0, 1 OPTION max_matches = 1

Interestingly, a given search term hangs with some MVA attribute values and works fine with others, and it is always the same values, not random ones.

It is also interesting that queries stop hanging once only a few free threads are left in the system.

Another oddity: when I run the queries manually, one at a time, from the mysql client, they hang, but when I test with mysqlslap at concurrency = 1 they work fine, and they only hang at higher concurrency values.

This is the command I use to put load on Manticore. I ran it several times until all the threads were clogged: the first 4-5 runs did not reproduce the hang, but the subsequent runs did.

mysqlslap --verbose --query=/query/hang.log --port=9306 --host=manticore --concurrency=2 --number-of-queries=20 --detach=1 --delimiter="\n"
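A hypothetical Python harness, not part of the original report, that repeats the mysqlslap experiment with a fixed deadline so you can see which queries are still pending. It assumes you supply an `execute` function that sends one query to Manticore on port 9306 (for example through a MySQL driver). The names `count_unfinished` and `fake_execute` are illustrative only, and the demo uses a fake `execute` that just sleeps. The per-query count includes runs that were still queued behind stuck workers when the deadline expired.

```python
import time
from concurrent.futures import ThreadPoolExecutor, wait
from typing import Callable, Dict, List


def count_unfinished(
    queries: List[str],
    execute: Callable[[str], None],
    concurrency: int,
    repeats: int,
    deadline: float,
) -> Dict[str, int]:
    """Submit every query `repeats` times to a pool of `concurrency` workers
    (similar in spirit to mysqlslap --concurrency) and return, per query text,
    how many runs had not finished after `deadline` seconds."""
    pool = ThreadPoolExecutor(max_workers=concurrency)
    futures = {}
    for _ in range(repeats):
        for q in queries:
            futures[pool.submit(execute, q)] = q
    # Wait for all runs, but give up after the deadline.
    _, not_done = wait(futures, timeout=deadline)
    unfinished = {q: 0 for q in queries}
    for fut in not_done:
        unfinished[futures[fut]] += 1
    # Don't block on stuck workers here; drop queued runs (needs Python 3.9+).
    # Note: the interpreter still joins running worker threads at exit.
    pool.shutdown(wait=False, cancel_futures=True)
    return unfinished


if __name__ == "__main__":
    # Hypothetical stand-in for a real client call: the "host_id = 7" query
    # simulates a hang by sleeping far longer than the deadline.
    def fake_execute(query: str) -> None:
        time.sleep(2.0 if "host_id = 7" in query else 0.01)

    demo = ["... any(host_id) = 7 ...", "... any(host_id) = 3 ..."]
    print(count_unfinished(demo, fake_execute, concurrency=2, repeats=3, deadline=0.5))
```

With a real `execute`, running the same query list at concurrency 1 and then at 2 or more should show whether the pending runs concentrate on the MVA values described in this post.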

With these queries, mysqlslap works only with concurrency = 1 and gets stuck if concurrency > 1:

SELECT id,host_id FROM post WHERE MATCH('(@(tags,user_screen_name,text,user_name) video)') and any(host_id) = 7 LIMIT 0, 3000 OPTION max_matches = 3000;

SELECT id,host_id FROM post WHERE MATCH('(@(tags,user_screen_name,text,user_name) video)') and any(host_id) = 3 LIMIT 0, 3000 OPTION max_matches = 3000;

SELECT id,host_id FROM post WHERE MATCH('(@(tags,user_screen_name,text,user_name) tiktok)') and any(host_id) = 3 LIMIT 0, 3000 OPTION max_matches = 3000;

SELECT id,host_id FROM post WHERE MATCH('(@(tags,user_screen_name,text,user_name) tiktok)') and any(host_id) = 9 LIMIT 0, 3000 OPTION max_matches = 3000;

With these queries, mysqlslap works with any concurrency value:

SELECT id,host_id FROM post WHERE MATCH('(@(tags,user_screen_name,text,user_name) video)') and any(host_id) = 7 LIMIT 0, 3000 OPTION max_matches = 3000;

SELECT id,host_id FROM post WHERE MATCH('(@(tags,user_screen_name,text,user_name) tiktok)') and any(host_id) = 3 LIMIT 0, 3000 OPTION max_matches = 3000;

The query log shows that the first 25 requests are processed successfully, but then the system becomes unresponsive until most of the threads are stuck. After that Manticore starts functioning again, but the stuck threads keep the system load average high.

With pseudo sharding disabled, testing works only up to a concurrency of 24. That is probably a different issue, though, because in this scenario I can't see any hung queries in the thread list.

I also noticed that the environment variable searchd_pseudo_sharding=0 has no effect in the Docker container, although some other variables do work. That is also a separate issue.

I use Manticore 6.2.12 dc5144d35@230822 (columnar 2.2.4 5aec342@230822) (secondary 2.2.4 5aec342@230822) on Docker Swarm.

I've attached the logs and some debug output at the link, but it would probably be better to create an issue on GitHub.